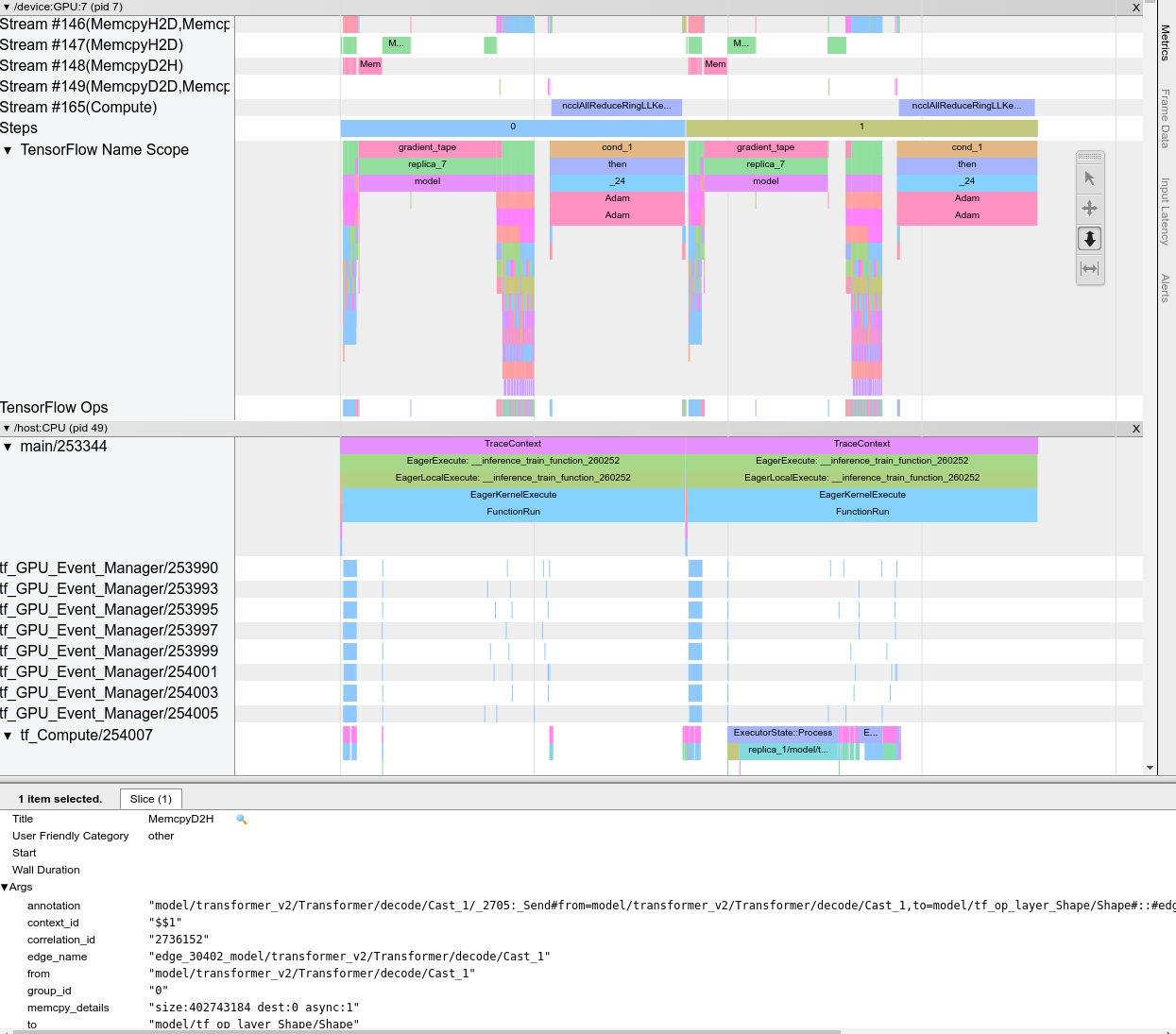

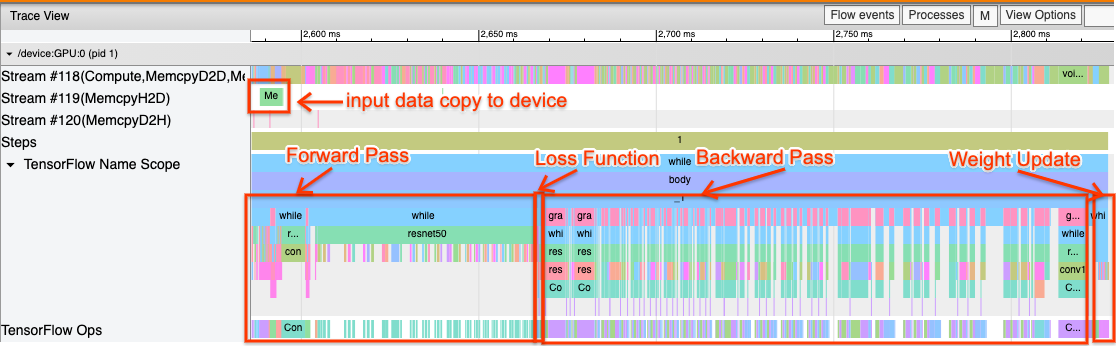

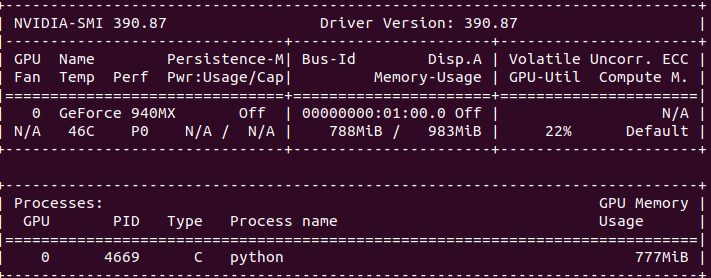

How to dedicate your laptop GPU to TensorFlow only, on Ubuntu 18.04. | by Manu NALEPA | Towards Data Science

Setting tensorflow.keras.mixed_precision.Policy('mixed_float16') uses up almost all GPU memory - Stack Overflow

![tensorflow ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape [256,256,15,15] and type float on error (insufficient memory error) - Code World](https://img2018.cnblogs.com/i-beta/1476081/201911/1476081-20191107184820177-1435194724.png)

tensorflow ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape [256,256,15,15] and type float on error (insufficient memory error) - Code World
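For scale, the allocation named in this error can be sized by hand. The following is a plain-Python back-of-the-envelope sketch. It assumes a dense tensor stored as 4-byte `float32`, or as 2-byte `float16` under a `mixed_float16` policy, and it ignores allocator padding. `tensor_bytes` and `DTYPE_BYTES` are hypothetical helpers for illustration, not TensorFlow APIs.

```python
# Hypothetical lookup table: bytes per element for the dtypes discussed here.
DTYPE_BYTES = {"float32": 4, "float16": 2}


def tensor_bytes(shape, dtype="float32"):
    """Raw storage size of a dense tensor (hypothetical helper).

    Ignores allocator rounding, alignment, and fragmentation.
    """
    n_elements = 1
    for dim in shape:
        n_elements *= dim
    return n_elements * DTYPE_BYTES[dtype]


shape = [256, 256, 15, 15]  # shape reported in the OOM message
fp32 = tensor_bytes(shape, "float32")
fp16 = tensor_bytes(shape, "float16")

# Print bytes and MiB for each dtype.
print(fp32, fp32 / 2**20)  # 58982400 56.25
print(fp16, fp16 / 2**20)  # 29491200 28.125
```

At about 56 MiB, the failing request is small compared with a typical GPU's capacity. This suggests the device was already nearly full, or too fragmented to supply a contiguous block, when the allocation was attempted. Switching to `float16` halves this figure. It does not, however, change TensorFlow's default behavior of mapping nearly all visible GPU memory up front.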

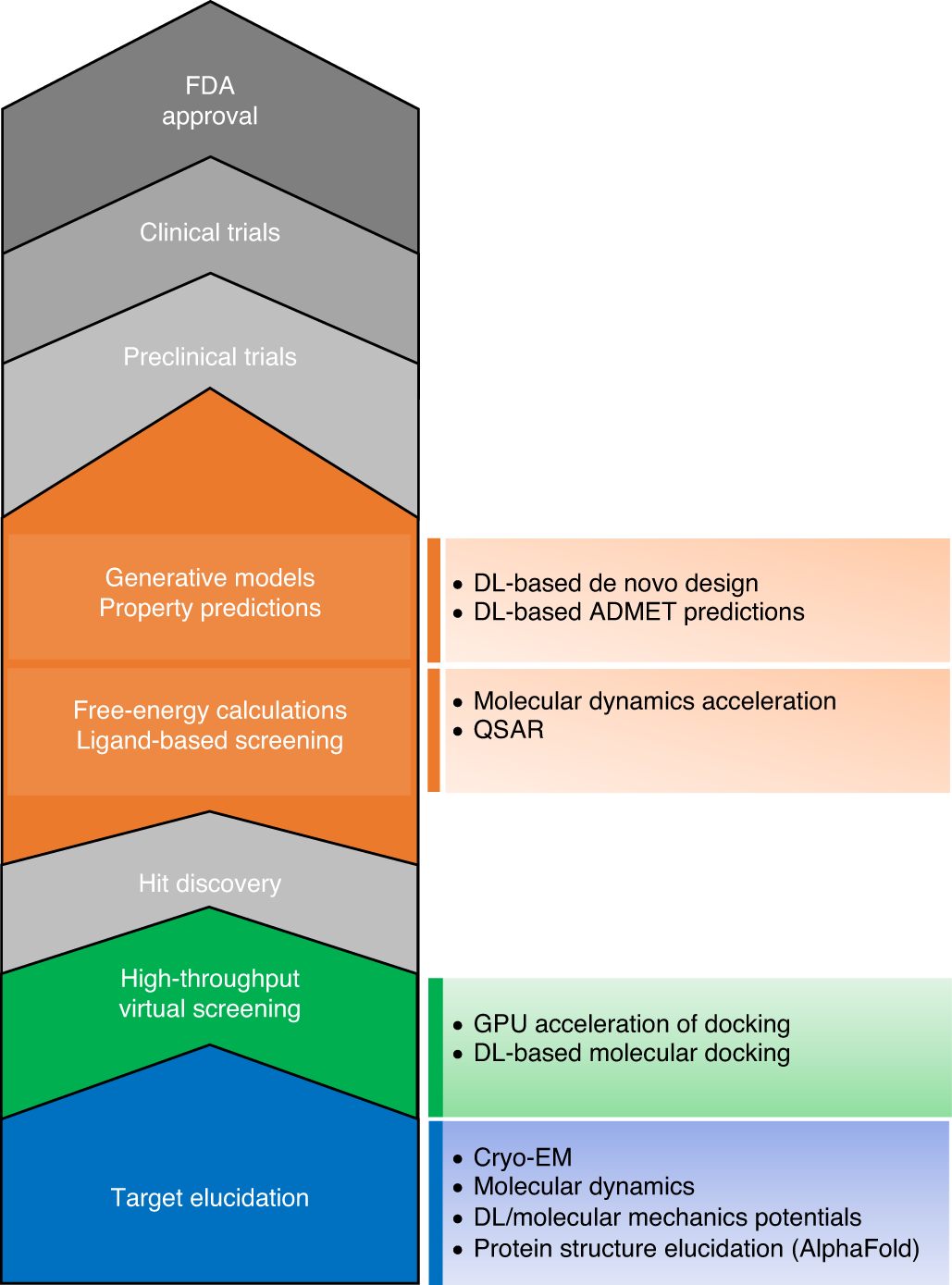

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

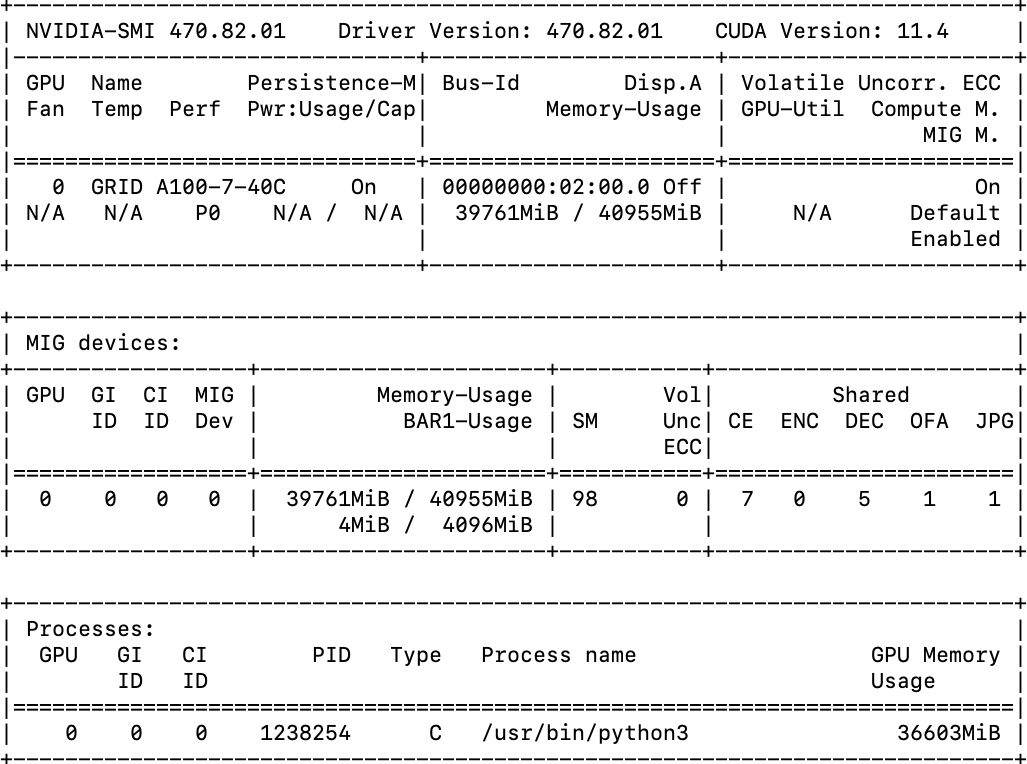

Memory Hygiene With TensorFlow During Model Training and Deployment for Inference | by Tanveer Khan | IBM Data Science in Practice | Medium

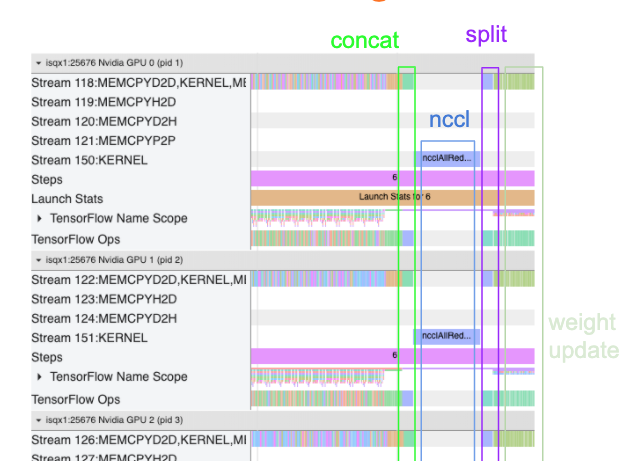

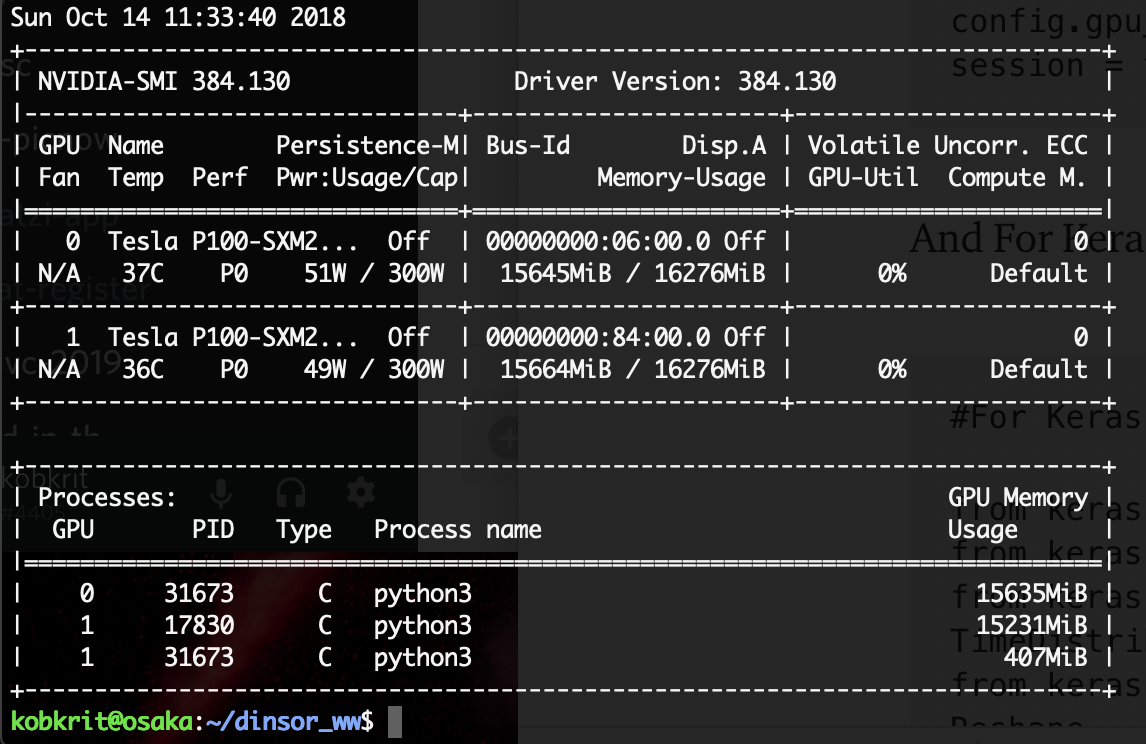

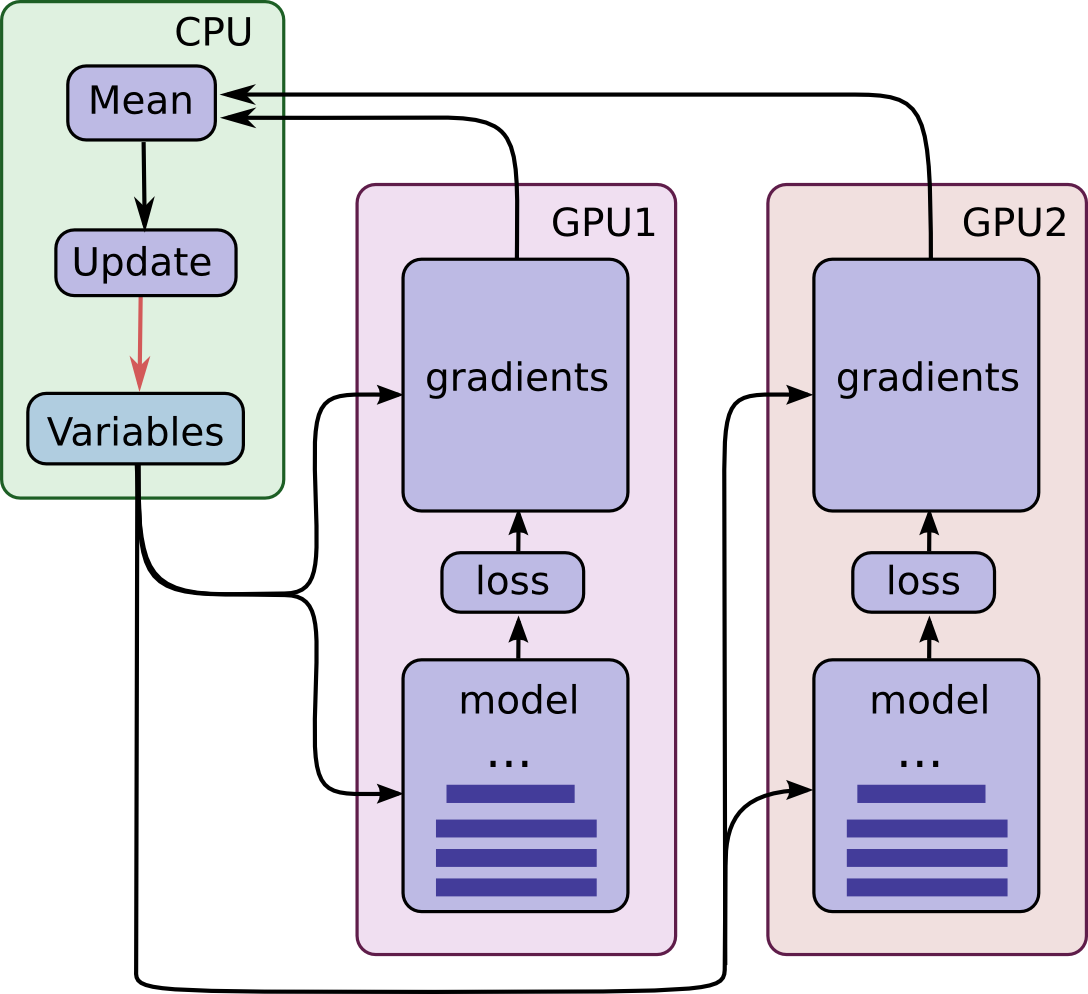

Training of scScope on multiple GPUs to enable fast learning and low... | Download Scientific Diagram

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100
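As a first-order illustration of why device memory caps batch size, here is a toy Python model. It assumes memory use is a fixed cost plus a constant amount per sample. The 2 GiB fixed cost and 50 MiB per-sample footprint are hypothetical numbers chosen for illustration, not measurements from the linked benchmark. `max_batch_size` is likewise a hypothetical helper.

```python
def max_batch_size(device_gib, per_sample_bytes, reserved_gib=0.0):
    """Upper bound on batch size under a linear memory model (hypothetical).

    device_gib: total device memory in GiB.
    per_sample_bytes: assumed memory per sample (activations, gradients, ...).
    reserved_gib: assumed fixed cost (weights, optimizer state, workspace).
    """
    usable_bytes = (device_gib - reserved_gib) * 2**30
    if usable_bytes <= 0:
        return 0  # the fixed cost alone does not fit
    return int(usable_bytes // per_sample_bytes)


# Hypothetical per-sample footprint of 50 MiB and fixed cost of 2 GiB.
per_sample = 50 * 2**20

for gib in (12, 32):
    print(gib, max_batch_size(gib, per_sample, reserved_gib=2.0))
# 12 204
# 32 614
```

Because the fixed cost is subtracted first, the larger card's batch headroom grows by more than the raw 32/12 memory ratio under this model (614/204 ≈ 3.0 versus ≈ 2.7). Real footprints depend on the model, framework, and cuDNN workspace choices.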

![\[Solved\] Could not create cudnn handle: CUDNN_STATUS_INTERNAL_ERROR | ProgrammerAH](https://programmerah.com/wp-content/uploads/2021/09/e4a5b67bdee3488e939ba63f02775f6d.png)